📝 After completing this theory chapter, you should be able to:

- Describe how companies and users historically moved from server rooms to datacenters to cloud solutions, taking total cost of ownership (TCO) into account

- Contrast on-premise with cloud computing

- Explain the tier system as defined by the Uptime Institute

- Describe 'redundancy'

- Give examples of large data centers in Belgium

- Identify and explain the main benefits and drawbacks of public cloud, and the same for private cloud

- Identify why someone would use private cloud instead of public cloud or vice versa

- Explain what load balancing, scalability and elasticity are

- Describe how HA and DR have their place in high reliability

- Explain the basic security measures that go into providing confidentiality and integrity

- Describe and give Azure examples of the three main IT as a service models

- Explain and contrast the three delivery models of cloud computing given in the course

- Describe some of the main public cloud providers: AWS, Azure and GCP

- Explain key concepts of Azure: Regions, Zones, Subscriptions, Resource groups

- Explain the basic factors on which Azure charges their users: Service type, usage, location, performance tier

- Describe where and how you can check these charges and upcoming costs in Azure

- Describe how you can optimize and reduce these charges

- Give examples of core Azure Services & related use cases

- Describe the basic process of making a VM on Azure and the connection options to install software on it

Moving from On-Premise to the Cloud, and Why?

Coming from the days of servers in small rooms, a lot of companies have moved to the Cloud for their storage and computing needs. But why?

From on-premise server rooms

In the not-so-distant past, organizations relied heavily on on-premise infrastructure to store, manage, and process their data and applications. In other words, they built a server room on the company's own premises, hence the term on-premise.

This approach came with several drawbacks such as:

- 💰 High upfront costs: On-premise infrastructure requires a large initial capital expenditure to acquire the hardware and software licenses, as well as the space and power to operate them. These costs can be prohibitive for small or medium-sized businesses, or for startups that need to test and iterate quickly.

- 🔧 Extensive maintenance: On-premise infrastructure requires constant monitoring, patching, updating, and troubleshooting by the user to ensure its availability and performance. This also entails hiring and training IT staff, who may not have the expertise or resources to keep up with the latest technologies and best practices.

- 📉 Limited scalability: On-premise infrastructure has a fixed capacity, which means it cannot easily accommodate sudden spikes or drops in demand. Scaling up or down often requires buying or selling hardware, which can be time-consuming, costly, and wasteful.

- 📵 Low reliability: On-premise infrastructure is vulnerable to failures, outages, and disasters that can disrupt the business operations and cause data loss. Recovering from such incidents can be expensive and time-consuming, and limiting their impact requires additional backup and recovery systems.

- 🔓 Full security responsibilities: On-premise infrastructure is equally exposed to various cyber threats, such as malware, ransomware, phishing, and denial-of-service attacks, that can compromise the confidentiality, integrity, and availability of the data and applications. Protecting the infrastructure from these threats requires investing in security software, hardware, and personnel, and complying with various regulations and standards, which can also quickly get expensive.

For example: a small e-commerce company 🛍

Imagine a company that runs an e-commerce website on its own servers. The company has to estimate the amount of traffic and transactions it will receive, and buy enough hardware and bandwidth to handle the peak demand. If the demand exceeds the capacity, the website may crash or slow down, resulting in lost customers and revenue. On the other hand, if the demand is lower than expected, the company may end up wasting resources and money on unused infrastructure.

In conclusion, an on-premise setup required substantial investment in physical servers, networking equipment, and skilled personnel to maintain and upgrade the infrastructure, but it was often the only choice.

To building your own datacenter

As the demand for data storage and processing increased, many large companies realized that having a 'server room' was not enough to meet their needs. Many companies even ended up having multiple server rooms scattered across their buildings.

It seemed a better idea to centralize all of this separate equipment into a datacenter, a dedicated facility that hosts hundreds or thousands of servers, along with power, cooling, security, and backup systems.

This enables these companies to avoid the costs of setting up multiple small server rooms by centralizing their IT infrastructure, and to benefit from economies of scale.

Of course such a scale also brings along bigger needs around aspects like ⚡ power, ❄ cooling or 🧯 security & safety to ensure a certain level of 📈 reliability and resilience.

⚡ Power

With many of the company's servers centralized into one datacenter, the facility requires a lot of electrical power. This power is distributed safely using Power Distribution Units (PDUs), which deliver it to the various equipment within the datacenter, helping to optimize energy consumption and prevent overloads.

On top of that, all those servers are affected when the power fails. That is why datacenters usually build in additional fallback measures, such as:

- Diesel Generators: These generators can start within seconds of a power outage, providing power instead of the normal grid, ensuring continuous operation.

- Uninterruptible Power Supplies (UPS): UPS systems provide temporary power during outages and power fluctuations, bridging the gap until the backup (diesel) generators take over. This keeps services running and prevents data loss. In its simplest form, a UPS is a set of batteries.

❄ Cooling

In a datacenter where all the company's servers are centralized, significant heat is generated as well.

Effective cooling is essential to maintain optimal operating conditions, centered around three main aspects:

- Containment: Hot and cold aisle containment systems help manage airflow, preventing hot and cold air from mixing and improving cooling efficiency.

- Natural Cooling (Passive): Passive cooling methods utilize natural resources to cool the datacenter. For example, Facebook’s datacenter in Luleå, Sweden, uses the cold Arctic air for natural cooling, significantly reducing their reliance on mechanical cooling systems.

- Mechanical Cooling (Active): Active cooling systems, such as air conditioners and chillers, are used to maintain the desired temperature and humidity levels within the datacenter. Many data centers use computer room air conditioning (CRAC) units for this, so that their servers keep operating efficiently.

🧯 Security & Safety

With all of a company's servers centralized into one datacenter, there is a higher need for physical security and safety measures:

- Physical Security: Implementing access control systems, surveillance cameras, and security personnel to prevent unauthorized access. For example, many data centers are equipped with mantraps.

- Fire Safety and Precautions: Installing fire suppression systems to protect equipment from fire damage is essential. Gas-based extinguishing systems are important because they can suppress fires without damaging sensitive electronic equipment. Microsoft uses advanced fire suppression systems, including gas-based extinguishing systems, to protect their equipment from fire damage without harming the servers.

📈 Reliability and resilience

All of this determines the level of reliability and resilience of the datacenter infrastructure. Data centers are classified into tiers, based on the level of redundancy and availability of their systems.

What is redundancy? ⚡

Redundancy is the practice of duplicating critical components or functions in a system to enhance its reliability and availability. For example, a datacenter may have redundant power and cooling systems, so that if one fails, the other can take over and prevent downtime. You can learn more about datacenter redundancy from these sources: "Datacenter Redundancy: N+1, 2N, 2(N+1) or 3N/2 (distributed)" or "What is datacenter Redundancy? N, N+1, 2N, 2N+1".

Not all organizations need the same level of redundancy, which is why there are different tiers to choose from, based on a tier system by the Uptime Institute. However, organizations should be careful not to under-invest or over-invest in their datacenter tier, as this could affect their business continuity and profitability. Here are the main characteristics and expected uptime of each datacenter tier:
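
To make the notation in those titles concrete, here is a minimal sketch. It assumes the usual reading in which N is the number of units (for example cooling units) needed to carry the full load; the function name and the example of 4 units are hypothetical.

```python
def units_to_install(n_required: int, scheme: str) -> int:
    """Return how many units to install for a given redundancy scheme.

    n_required is N: the number of units needed to carry the full load.
    """
    schemes = {
        "N": n_required,                # no spare capacity at all
        "N+1": n_required + 1,          # one spare unit
        "2N": 2 * n_required,           # a complete second set
        "2N+1": 2 * n_required + 1,     # a second set plus one spare
        "2(N+1)": 2 * (n_required + 1), # two sets, each with its own spare
    }
    return schemes[scheme]


# Hypothetical example: 4 cooling units are needed to carry the load.
for scheme in ("N", "N+1", "2N", "2N+1", "2(N+1)"):
    print(f"{scheme:>6}: install {units_to_install(4, scheme)} units")
```

The more units are installed beyond N, the more failures the system can absorb, but the higher the cost.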

- Tier 1 data centers have a single path for power and cooling, with no redundancy. They have an expected uptime of 99.671% (28.8 hours of downtime per year).

- Tier 2 data centers have a single path for power and cooling, with some redundant components. They have an expected uptime of 99.741% (about 22.7 hours of downtime per year).

- Tier 3 data centers have multiple paths for power and cooling, with one path active and one path on standby. They can perform maintenance and upgrades without disrupting the service. They have an expected uptime of 99.982% (1.6 hours of downtime per year).

- Tier 4 data centers have multiple paths for power and cooling, with both paths active and fully redundant. They can withstand any single point of failure without affecting the service. They have an expected uptime of 99.995% (26.3 minutes of downtime per year).
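
The downtime figures follow directly from the uptime percentages. As a rough sketch, assuming a year of 365 days (8760 hours) and a helper name chosen only for this illustration:

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def annual_downtime_hours(uptime_percent: float) -> float:
    """Convert an expected uptime percentage into hours of downtime per year."""
    return (100 - uptime_percent) / 100 * HOURS_PER_YEAR


# Uptime percentages per tier, as listed above.
tiers = {1: 99.671, 2: 99.741, 3: 99.982, 4: 99.995}
for tier, uptime in tiers.items():
    hours = annual_downtime_hours(uptime)
    print(f"Tier {tier}: {uptime}% uptime -> {hours:.1f} hours ({hours * 60:.0f} minutes) per year")
```

A difference of a few hundredths of a percent in uptime therefore translates into hours of extra downtime per year.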

If you're looking for some more optional footage, watch 📺 this in-depth tour through a datacenter.

Ending with new business models and Cloud

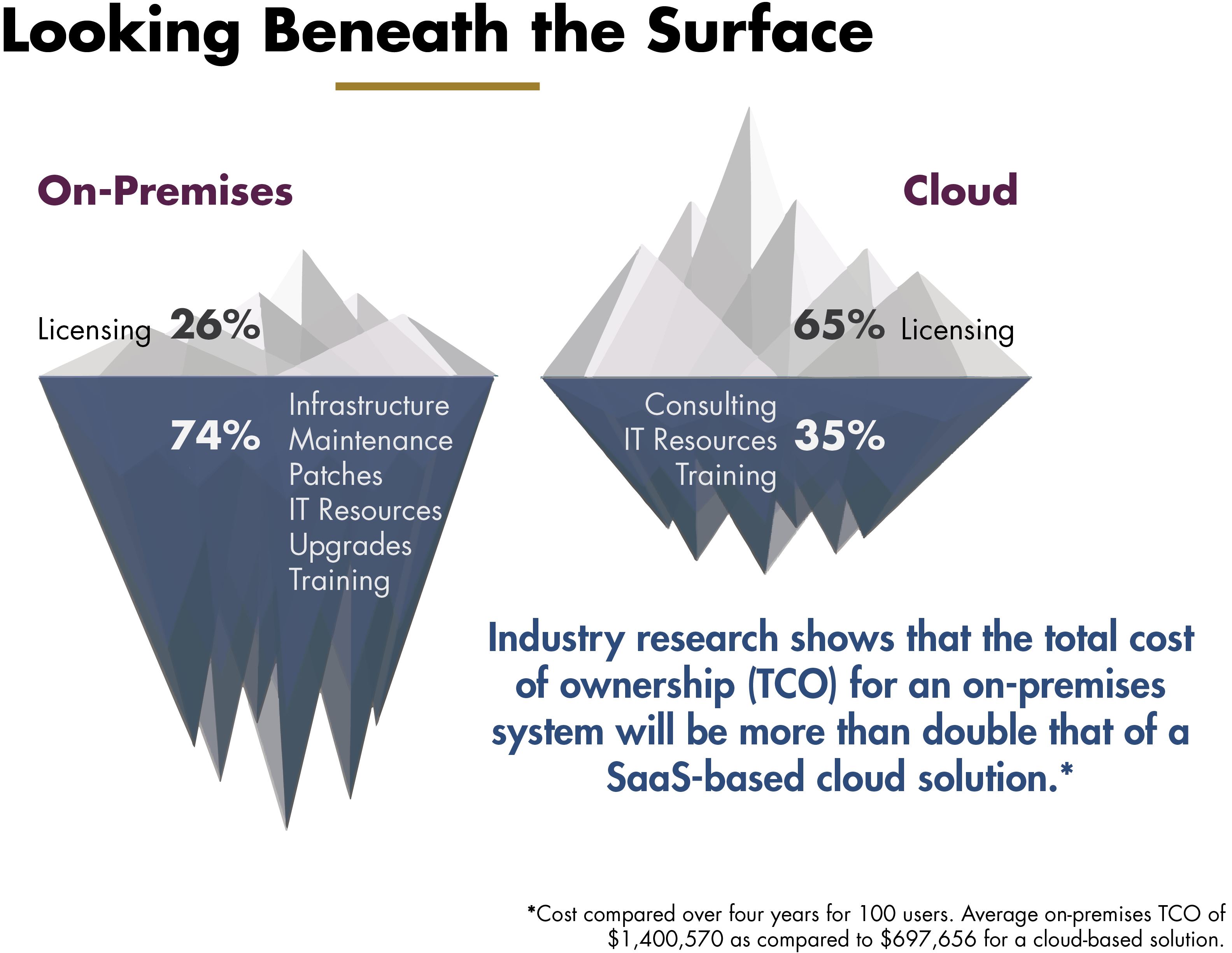

As datacenter expertise matured, companies began to recognize the value in new business models based on the datacenter. These became feasible especially because the total cost of ownership (TCO) of a datacenter owned entirely by one company can still be too high for many enterprises.

🗄 Co-location datacenters

A first business model is centered around companies offering parts of datacenters for rent to other organizations.

These companies were created with the goal of building and operating co-location data centers. They are called co-location because many companies and providers share one datacenter. Customers rent a part of that datacenter and bring their own equipment to the rented rack space. The company running the co-location datacenter takes care of power, cooling, connectivity, etc. In Belgium, companies that started with this business model include InterXion (now marketed under Digital Realty) and LCL.

In time, these providers started to take care of more and more themselves, so that customers no longer need to deal with anything hardware-related. Customers can just focus on installing operating systems, software, configuration, etc. on hardware that is taken care of for them.

This led to the emergence of cloud business models like 🎩 Private cloud and 🌎 Public cloud, offered by companies called Cloud providers.

Cloud computing may seem like a magical and abstract concept, but in reality, it is also powered by physical infrastructure. This physical infrastructure could be a datacenter fully owned by a cloud provider, or a part of a co-location datacenter rented by the cloud provider.

🎩 Private cloud

As we just talked about, Private cloud is essentially centered around companies building data centers or renting large parts of co-location data centers and then simply offering hosting to other companies alongside management services. This hosting can go from databases to applications.

One example of these providers in Belgium is RCloud from Inetum RealDolmen, which rents space at both LCL and InterXion (Digital Realty) to offer its services.

✅ Private cloud especially offers:

- privacy, as the customer can ensure their data is on servers in a specific country and location to abide by specific laws. The customer knows the exact location where their data, applications, ... are hosted.

- customization, as the customer can call their personal contact at the cloud provider to ask for more custom setups that are outside of the standard menu.

- access, as some private cloud providers allow the customer to send their own engineer to work on the servers on-site.

⚠ Yet this often makes private cloud providers slower to change setups, less cost-effective, and more limited in their variety of services.

Watch this 📺 video (English subtitles) by Combell about InterXion-Digital Realty's Zaventem datacenter, a co-location datacenter in Belgium out of which Combell also provides cloud services.

🌎 Public cloud

You have probably already heard about cloud providers such as Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and IBM Cloud. These cloud providers have many self-built data centers all over the world, where they store and run their servers, storage, networks, and software while giving users access over the internet. On top of that, these data centers are very large, which is why they are called hyperscale.

This huge scale has allowed these companies to offer cloud services much like public transportation, hence Public cloud. Customers now rent computing, storage or hosting capacity without knowing the exact physical location of the servers and racks on which their systems or applications are hosted.

⚠ Here this often means that:

- privacy laws can become a problem because you cannot guarantee that certain data will always just be in one country

- you have to stick to the menu and you do not have the ability to call someone at the provider to create a custom setup or package for you

- the customer cannot send their own engineers to work on-site

✅ On the other hand public cloud offers the customer a quick and easy start for their first cloud service, more cost-effectiveness, and a larger variety of services because public cloud providers can do more for less money thanks to their scale.

🌐 Hybrid cloud

Hybrid cloud is the most flexible and balanced delivery model, where cloud services are provided by a combination of public and private clouds, and are integrated and coordinated through a common platform. Hybrid cloud tries to offer the best of both worlds, allowing users to leverage the advantages of each cloud type while mitigating its disadvantages. An example of this in Belgium is Cegeka.

These are some of the key service and delivery models that characterize cloud computing, but there are also other models that extend or modify them, such as Community cloud, Multi-cloud, and Edge cloud. As we said before cloud computing is a diverse and dynamic field that is constantly evolving and adapting, as new user needs and preferences emerge and new market opportunities and challenges arise.

Cloud computing vs. On-Premise

In summary, cloud computing allows users to access resources, such as servers, storage, databases, analytics, and software, without having to own or manage them. These benefits for the user often neatly counter the drawbacks of the classic on-premise server room:

- 💸 Low or no upfront costs: Cloud computing follows a pay-as-you-go model, where users only pay for the resources they consume, and nothing more. This eliminates the need for large capital investments, and reduces the operational expenses and risks associated with owning and managing infrastructure.

- 🏝 Minimal maintenance: Cloud computing shifts the responsibility of maintaining and updating the infrastructure from the users to the cloud providers, who have the expertise and resources to ensure the availability and performance of the services. This frees up the users from the hassle and cost of hiring and training IT staff, and allows them to focus on their core business functions.

- 📈 On-demand scalability: Cloud computing can dynamically allocate and deallocate resources according to the changing needs of the users. Scaling up or down can be done in minutes, without any upfront or sunk costs, or any waste of resources.

- 📶 High reliability: Cloud computing is resilient to failures, outages, and disasters, as it leverages multiple redundant servers, storage, and networks across different locations. This ensures that the services are always available and the data is always backed up and recoverable.

- 🛡 Strong security: Cloud computing is protected by various security measures, such as encryption, firewalls, authentication, and authorization, that safeguard the data and applications from unauthorized access and manipulation. Cloud providers also adhere to the highest standards and regulations of data privacy and security, and offer various tools and services to help the users comply with them.

For example: a small e-commerce company 🛍

Imagine the same company that runs an e-commerce website on the cloud. The company only pays for the resources it actually uses, and can scale up or down depending on the demand. The company does not have to worry about buying, installing, or maintaining any hardware or software, as the cloud provider takes care of that. The company can also leverage the cloud provider's expertise and tools to optimize its performance, security, and customer experience.
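
The difference between the two versions of the e-commerce example can be put into a small calculation. This is only a sketch: the demand pattern and the price are made up, and the same price per server-hour is assumed for both models to keep the comparison simple.

```python
# All figures below are hypothetical and only illustrate the reasoning.
# Servers needed per hour over one day: 20 quiet hours, then a 4-hour peak.
HOURLY_DEMAND = [2] * 20 + [10] * 4
PRICE_CENTS_PER_SERVER_HOUR = 10  # assumed price, kept equal for both models


def fixed_capacity_cost(demand, price):
    """Capacity is sized for the peak and paid for during every hour."""
    return max(demand) * len(demand) * price


def pay_as_you_go_cost(demand, price):
    """Only the servers actually used in each hour are paid for."""
    return sum(demand) * price


fixed = fixed_capacity_cost(HOURLY_DEMAND, PRICE_CENTS_PER_SERVER_HOUR)
flexible = pay_as_you_go_cost(HOURLY_DEMAND, PRICE_CENTS_PER_SERVER_HOUR)
print(f"fixed capacity: {fixed / 100:.2f} EUR per day")
print(f"pay-as-you-go:  {flexible / 100:.2f} EUR per day")
```

With these made-up numbers, the fixed setup pays for 240 server-hours per day while the pay-as-you-go setup pays for 80, because the peak capacity sits idle for most of the day.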

Cloud Computing: Zooming in on the Benefits

You have seen that cloud computing offers many benefits over traditional IT solutions like on-premise infrastructure. In this section, we will zoom in on three of the most important benefits: on-demand scalability, high reliability, and strong security.

📈 On-demand scalability/elasticity

One of the main advantages of cloud computing is its ability to adapt to the changing needs of the users. Users can access and use as much or as little computing resources as they need, without having to worry about the capacity or the cost of the infrastructure. Cloud computing can dynamically allocate and deallocate resources according to the demand, using techniques such as load balancing.

Load balancing: Load balancing is a technique that distributes the workload among multiple servers to keep performance and availability high. It can be applied at different levels (network, application, or data), use different algorithms (such as round-robin, least connections, or weighted distribution), and work through different methods (such as DNS, TCP, or HTTP). This helps cloud computing handle high traffic, reduce latency, and prevent overload. Also check 📺 this video for more information.
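
Two of the algorithms mentioned above, round-robin and least connections, can be sketched in a few lines. This is a simplified illustration, not how any particular cloud load balancer is implemented; the class names and the server names `web-1`, `web-2`, `web-3` are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in a fixed rotating order."""

    def __init__(self, servers):
        self._servers = cycle(servers)

    def pick(self):
        return next(self._servers)


class LeastConnectionsBalancer:
    """Sends each new request to the server with the fewest open connections."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def pick(self):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the new request opens a connection
        return server

    def release(self, server):
        self.active[server] -= 1  # the request finished, connection closed


rr = RoundRobinBalancer(["web-1", "web-2", "web-3"])
print([rr.pick() for _ in range(5)])
# prints ['web-1', 'web-2', 'web-3', 'web-1', 'web-2']
```

Round-robin ignores how busy each server is, which is fine when requests are similar in size. Least connections keeps track of open connections, so a server stuck on long-running requests receives fewer new ones.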

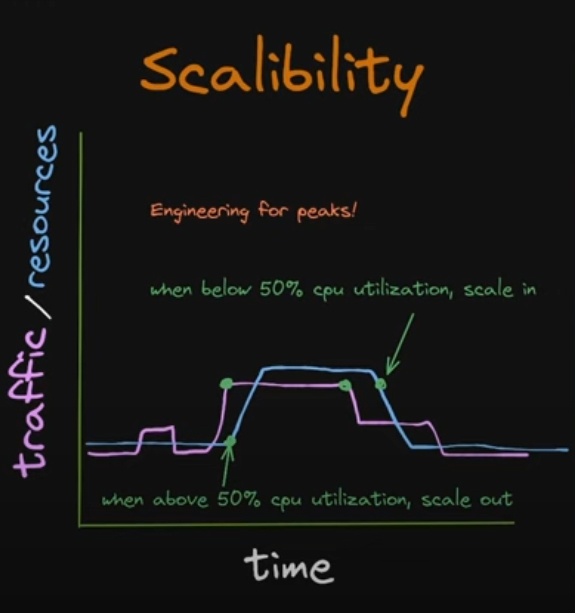

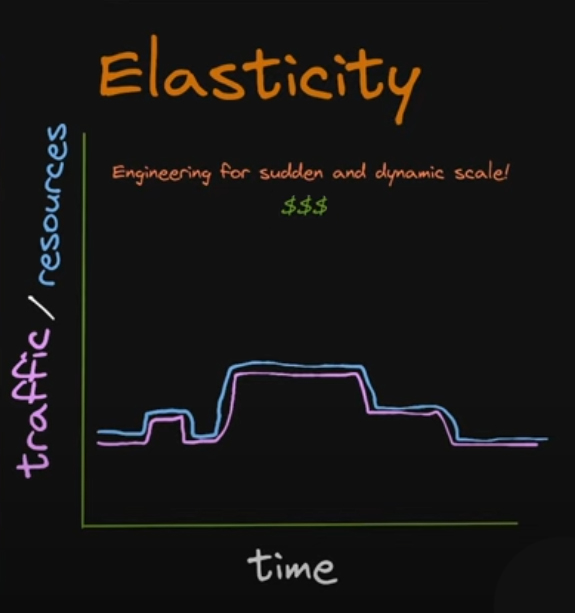

These techniques enable cloud computing to provide scalability or elasticity in minutes, without any upfront or sunk costs, or any waste of resources:

- Scalability: The ability of a system to accommodate larger or smaller workloads by adding or removing resources manually or programmatically. Scalability is less expensive to set up, but also less dynamic in following the increase and decrease of traffic. The infrastructure is set up to react to peaks using rules or thresholds: when a certain usage limit is crossed, more virtual servers are started up.

- Elasticity: The ability of a system to automatically adjust the amount of resources allocated to it closely following the current demand and traffic. Elasticity does that dynamically and faster, using more automatic monitoring. It aims to optimize resource utilization and cost efficiency by adapting to demand fluctuations.
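
The contrast between the two terms can be sketched as follows. This is a simplified model under stated assumptions: the capacity per server, the thresholds, the step size and the traffic figures are all hypothetical, and real cloud autoscaling is configured through the provider's own services rather than written like this.

```python
import math

CAPACITY_PER_SERVER = 100  # hypothetical: requests per second one server can handle


def threshold_scaling(current_servers, load, step=2, upper=0.8, lower=0.3):
    """Rule-based scaling: add or remove a fixed step of servers
    when utilisation crosses a threshold."""
    utilisation = load / (current_servers * CAPACITY_PER_SERVER)
    if utilisation > upper:
        return current_servers + step
    if utilisation < lower and current_servers > step:
        return current_servers - step
    return current_servers


def elastic_scaling(load, minimum=1):
    """Elastic scaling: the number of servers closely follows the current load."""
    return max(minimum, math.ceil(load / CAPACITY_PER_SERVER))


traffic = [120, 450, 900, 300, 80]  # hypothetical requests per second over time
servers = 2
for load in traffic:
    servers = threshold_scaling(servers, load)
    print(f"load={load:>4}  threshold-based={servers:>2}  elastic={elastic_scaling(load):>2}")
```

In this sketch the threshold-based version moves in fixed steps, so it lags behind a sharp peak and stays over-provisioned after it, while the elastic version tracks the load at every measurement.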

Also check out 📺 this video for more explanation around these two terms. This video is also the source of the two graphs shown above.

📶 High reliability

Another key advantage of cloud computing is its ability to ensure the continuity and the quality of the services. Users can rely on cloud computing to provide consistent and uninterrupted access to their data and applications, even in the event of failures, outages, or disasters. This is collected in two terms:

- High availability (HA): High availability is a feature that keeps the services available and minimizes downtime. It can be achieved by using multiple redundant servers, storage, and networks. High availability helps cloud computing maintain business continuity (BC), i.e. keep the user's business running as close to 100% of the time as possible. One of the techniques is geo-redundancy, where the content of a datacenter is duplicated to another datacenter at least 200 km away, as described by Centron, a German cloud provider.

- Disaster recovery (DR): Disaster recovery is a process that ensures that the data is always backed up and recoverable in the event of massive failures or disasters: "What if a plane crashes on your datacenter?✈🔥" Disaster recovery can be achieved by using multiple redundant servers, storage, and networks, but now also across different locations, which are also known as availability zones or regions. This is nicely illustrated by AWS' regions and availability zones map.

Also check out 📺 this video for more explanation around HA/DR reliability in Cloud vs. On-premise.

🛡 Strong security

A third major advantage of cloud computing is its ability to protect the data and the applications from unauthorized access and manipulation. Users can trust cloud computing to safeguard their information and assets, using various security measures, such as encryption, firewalls, authentication, and authorization:

- Encryption converts the data into an unreadable form that can only be decrypted with a key.

- Firewalls filter the incoming and outgoing traffic, blocking or allowing it based on predefined rules.

- Authentication verifies the identity of the users, using methods such as passwords, tokens, or biometrics.

- Authorization determines the level of access to files and applications that the users have, using policies and roles.

These measures enable cloud computing to provide confidentiality and integrity of the data and the applications, which are the core principles of information security:

- Confidentiality: Confidentiality is a principle that ensures that the data is only accessible to authorized parties, and prevents unauthorized access and disclosure.

- Integrity: Integrity is a principle that ensures that the data is only modified by authorized parties, and prevents unauthorized modification and corruption.
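
Two of these ideas can be illustrated with Python's standard library. This is a minimal sketch, not a production setup: the secret key, the roles and the helper names are hypothetical. It shows a keyed hash (HMAC) to detect unauthorized modification, which supports integrity, and a role check as a simple form of authorization, which supports confidentiality.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-key-do-not-use-in-production"  # hypothetical shared secret


def sign(data: bytes) -> str:
    """Compute a keyed hash (HMAC-SHA256) over the data."""
    return hmac.new(SECRET_KEY, data, hashlib.sha256).hexdigest()


def is_unmodified(data: bytes, signature: str) -> bool:
    """Integrity check: the data still matches the signature made earlier."""
    return hmac.compare_digest(sign(data), signature)


# Hypothetical roles and the actions each role may perform.
ROLE_PERMISSIONS = {"reader": {"read"}, "editor": {"read", "write"}}


def is_allowed(role: str, action: str) -> bool:
    """Authorization check: does this role include the requested action?"""
    return action in ROLE_PERMISSIONS.get(role, set())


signature = sign(b"order 1: 2 items")
print(is_unmodified(b"order 1: 2 items", signature))   # data untouched
print(is_unmodified(b"order 1: 20 items", signature))  # data was changed
print(is_allowed("reader", "write"))                   # reader may not write
```

Anyone without the key cannot produce a valid signature for changed data, and anyone without the right role is refused the action.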

Cloud providers also adhere to the highest standards and regulations of data privacy and security, such as GDPR and HIPAA, and offer various tools and services to help the users comply with them, such as encryption keys, audit logs, and compliance reports.

Cloud providers: Service and Delivery

Service models

Whether it is 🌎 Public cloud or 🎩 Private Cloud, cloud providers offer various service models that define the type and level of computing resources and capabilities that are provided to the users.

We say service models because we see this as IT as a Service.

Think of buying a Spotify subscription: you only need to provide your own ability to connect to the app and the rest is included in the service: hosting of the songs, updates to the application, licences and royalties of the songs, ... In the same way IT, like a virtual server, can be offered as a service. You only need to make sure you can connect over the internet and do whatever you want with the server; the rest is included in the service: power, storage, updates, security, backups, ...

When we look at cloud computing specifically, the main IT as a service models are:

- 🗄 Infrastructure as a Service (IaaS): IaaS is the most basic service model, where users can access and manage raw computing resources, such as servers, storage, networks, and operating systems. Users have full control and responsibility over these resources, and can configure them as they wish. IaaS is suitable for users who need high customization and scalability, and have the technical skills and resources to manage their infrastructure.

- 📐 Platform as a Service (PaaS): PaaS is the next level of service model, where users can access and manage pre-configured computing platforms, such as databases, web servers, development tools, and middleware. Users do not have to worry about the underlying infrastructure, and can focus on developing and deploying their applications. PaaS is suitable for users who need rapid and easy application development and deployment, and have the development skills and resources to manage their platforms.

- 🖥 Software as a Service (SaaS): SaaS is the highest level of service model, where users can access and use ready-made software applications, such as email, office, CRM, and ERP. Users do not have to worry about the infrastructure or the platform, and can simply use the applications through a web browser or a mobile app. SaaS is suitable for users who need standard and simple application functionality, and have the business skills and resources to manage their applications.

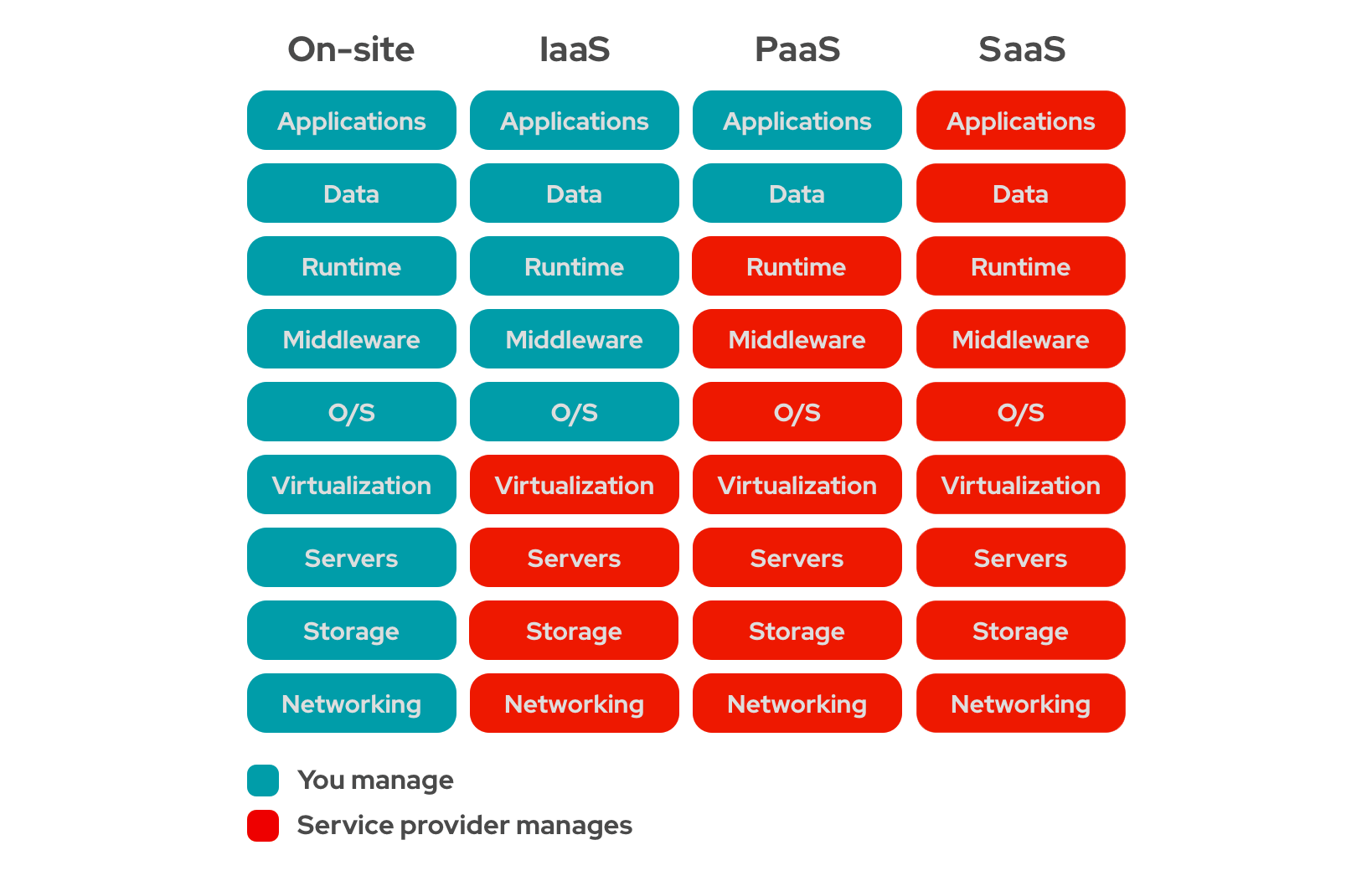

The service provider and the user each have different responsibilities in these models. IaaS puts a lot more responsibility with the user than SaaS.
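
One way to picture this split is as a stack of layers with a dividing line that moves up from IaaS to SaaS. The sketch below uses a commonly drawn simplification of that stack; the exact layer names and where the line falls are assumptions for illustration, and real services can divide responsibilities differently.

```python
# Simplified stack, from the bottom (hardware) to the top (applications).
LAYERS = [
    "networking", "storage", "servers", "virtualization",
    "operating system", "middleware", "runtime", "data", "applications",
]

# Assumed index of the first layer that the user manages in each model.
USER_MANAGES_FROM = {"on-premise": 0, "IaaS": 4, "PaaS": 7, "SaaS": len(LAYERS)}


def responsibilities(model):
    """Split the stack into provider-managed and user-managed layers."""
    split = USER_MANAGES_FROM[model]
    return {"provider": LAYERS[:split], "user": LAYERS[split:]}


for model in ("on-premise", "IaaS", "PaaS", "SaaS"):
    managed = responsibilities(model)["user"]
    print(f"{model:>10}: user manages {', '.join(managed) or 'nothing in the stack'}")
```

Even in SaaS the user remains responsible for how the application is used, for example who gets an account and what data is put into it.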

Also read what Belgian hosting company Combell has to say about the difference between these three, in English or Dutch. We will look at more examples of each of these services in the next chapter.

Cloud, the future

In the end, cloud computing can offer many advantages over on-premise infrastructure, and that is why it has become the dominant paradigm for modern IT. According to a recent report by Gartner, IT spending on public cloud services continues to rise unabated. In 2024, worldwide end-user spending on public cloud services is forecast to total $679 billion and is projected to exceed $1 trillion in 2027. The report also predicts that by 2028, cloud computing will shift from being a technology disruptor to becoming a necessary component for maintaining business competitiveness.