📝 After completing this theory chapter, you should be able to:

- Describe how hosting a small application in a VM can lead to inefficiencies and how a switch to containers could solve that

- Describe the basic structure of a container and how it differs from a VM

- Describe the role of the kernel in general and related to containers

- Describe the general evolution of containers in their history

- Explain how containers and container images relate to each other

- Explain the role of Docker Hub

- Give the differences between a more conventional Linux distribution, such as Debian, and Alpine Linux

- Explain the role of processes in a Docker container

- Describe what happens if you run a 'docker run' command for the first time

- Describe the different Docker commands: run, start, stop, rm, ps, exec, attach and their flags (-it, -d, ...)

- Explain how ports work in relation to Docker containers and which problems can occur

The dawn of the container

What if there was a way to pack only the application code, dependencies, and runtime environment into small, isolated environments? This would make software deployment and management easier and more efficient...

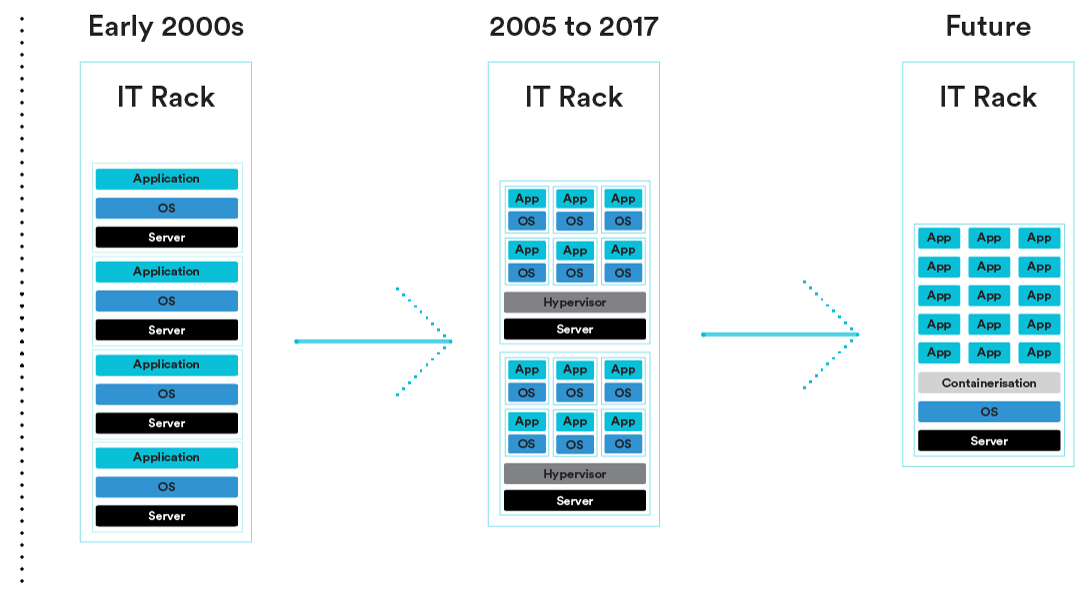

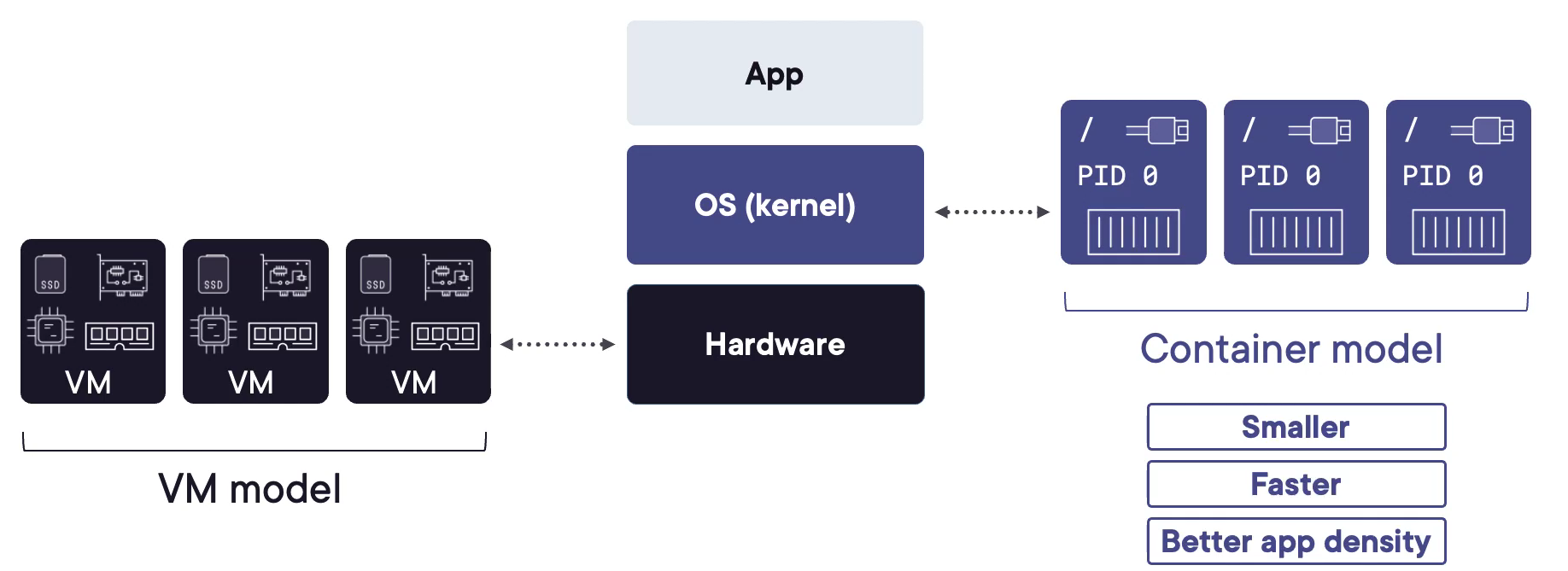

Imagine: a developer wants to deploy a collection of small or even tiny applications on a cloud platform like AWS or Azure. Each small application has a specific function, such as managing user authentication or processing transactions. The developer might use virtual machines to host these small applications separately, giving each service its own VM.

This scenario could waste a lot of resources, however. Every virtual machine has a full operating system installation, which takes up a lot of storage, memory, and CPU resources. Running multiple VMs, each with its own operating system, would use far more resources than the microservices themselves need. This would be inefficient and costly.

But there is a solution that lets the developer use resources more efficiently by running multiple virtualized applications on one host, with far fewer problems with performance or scalability. This would make deployment and management faster and easier, and avoid many problems with updates and compatibility.

That solution is containers. These small environments have only the essentials: the application code and dependencies. Unlike virtual machines, containers share the host's kernel and resources, reducing overhead and increasing efficiency.
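The overhead argument above can be made concrete with a little arithmetic. The sketch below is a deliberately simplified model, not a measurement: the function names and all numbers are hypothetical, and it ignores the overhead of the hypervisor and of the container engine itself.

```python
def vm_memory_mb(services: int, app_mb: int, guest_os_mb: int) -> int:
    """Total memory when every service runs in its own VM with a full guest OS."""
    return services * (app_mb + guest_os_mb)


def container_memory_mb(services: int, app_mb: int, host_os_mb: int) -> int:
    """Total memory when all services run as containers on one shared host OS."""
    return host_os_mb + services * app_mb


if __name__ == "__main__":
    # Hypothetical figures, chosen only to make the arithmetic visible.
    services, app_mb, os_mb = 10, 50, 1024
    print("VMs:       ", vm_memory_mb(services, app_mb, os_mb), "MB")
    print("Containers:", container_memory_mb(services, app_mb, os_mb), "MB")
```

In this model the operating system cost is paid once per VM but only once per host for containers, so the gap grows with the number of small services.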

With containers, the developer can deploy each application in its own small, isolated environment, using resources efficiently without extra bloat. Updates become smoother, and compatibility issues are greatly reduced.

Basic structure of a container

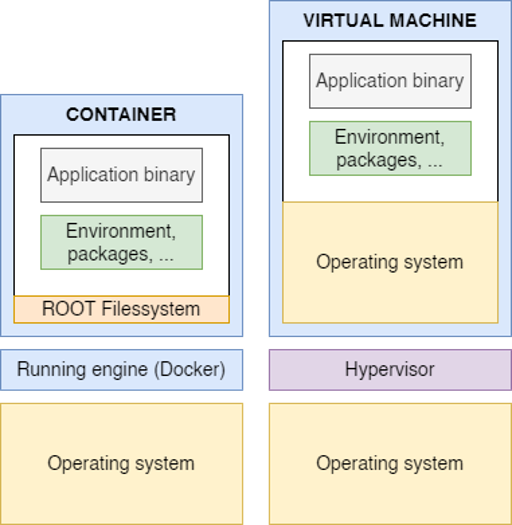

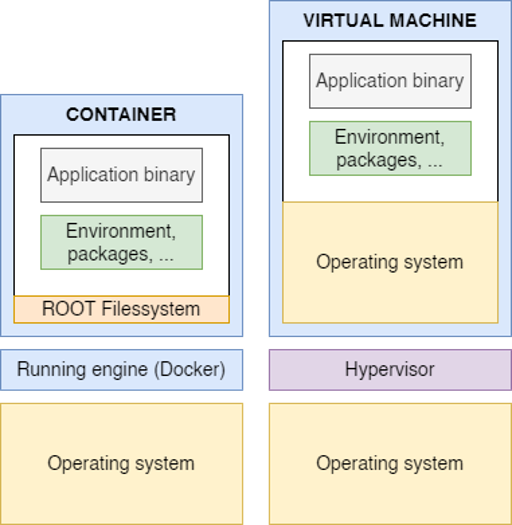

A container is, in essence, a form of virtualisation that bundles together everything an application needs to run in a consistent and isolated way. The best way to understand it is to look at the differences between a VM and a container.

Only the filesystem instead of the entire OS

A VM has a complete OS, including its own kernel. It also has its own drivers, libraries, and applications that run on top of the kernel. A VM’s file system is the way these files and directories are organized and stored on the disk. This file system is usually formatted with a specific file system type, such as NTFS, FAT, or EXT4, depending on the OS.

What is a Kernel?

A kernel is the core component of an operating system that manages the communication between the hardware and the software of a computer. It is responsible for tasks such as memory management, process scheduling, disk management, and system calls.

A container, on the other hand, does not have its own kernel or drivers. It shares the kernel of the host OS, which is the OS that runs the container engine.
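One way to observe the shared kernel is to ask the operating system which kernel a process is running on. The following is a minimal sketch using Python's standard `platform` module; the function name `kernel_info` is made up for this illustration.

```python
import platform


def kernel_info() -> dict:
    """Return the OS name and kernel release seen by the current process."""
    uname = platform.uname()
    return {"system": uname.system, "release": uname.release}


if __name__ == "__main__":
    info = kernel_info()
    print(f"{info['system']} kernel release: {info['release']}")
```

Run on a Linux host and again inside a container on that host, this script should report the same kernel release, because the container has no kernel of its own. A VM on the same machine would report the release of its own guest kernel instead. On Docker Desktop for macOS or Windows, containers run inside a small Linux VM, so they report that VM's kernel rather than the kernel of the desktop OS.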

A container only has the user-mode portion of an OS, mainly the filesystem, which is based on a base image. This is a pre-built template that provides the basic OS files and tools for the container. It can be customized and extended by adding or modifying files and directories, which is what one does when they place their application in the container. However, this also means that containers are limited by the host kernel: a container cannot run an operating system that needs a different or incompatible kernel.

Supported by a Container engine instead of a Hypervisor

A container engine and a hypervisor are both software processes that enable virtualization, which is the technology that allows running multiple isolated environments on a single physical machine. However, they differ in the level and the method of virtualization.

A container engine, such as Docker or LXC, virtualizes the operating system and allows running multiple containers on top of it. It deploys and manages the containers, and provides them with the necessary resources, such as CPU, memory, and network. Because the containers share the kernel of the host operating system, they start faster and use fewer resources than virtual machines, each of which has to run a full operating system.

A hypervisor, such as ESXi, KVM, or Hyper-V, virtualizes the hardware and allows running multiple virtual machines (VMs) on top of it. It creates and runs the VMs, and provides them with virtual resources, such as virtual CPU, virtual memory, and virtual disk. A hypervisor also isolates the VMs from each other and from the host operating system, which means that the VMs can run different or incompatible operating systems and offer stronger isolation and more flexibility than containers. However, this also means that VMs are heavier and slower to start than containers, and that they use more resources than the applications inside them actually need.

So, to summarize, the main difference between a container engine and a hypervisor is that a container engine virtualizes the operating system and runs containers, while a hypervisor virtualizes the hardware and runs virtual machines.

Overall structure of a container

- The file system, as discussed above, is the structure and organization of the files and directories in the container. The file system contains the application code, the dependencies, the configuration files, and any other data or files that the application needs to run. The file system is usually based on a base image, which is a pre-built template that provides the basic operating system files and tools for the container. The base image can be customized and extended by adding or modifying files and directories, which is what one does when they place their application in the container.

- The application itself, which is the executable or script that runs the main logic and functionality of the container. It can be written in any programming language or framework, as long as it can run on the host kernel and the container engine. The application can also communicate with other containers or external services using standard protocols and interfaces, such as TCP, HTTP, or REST APIs built on top of HTTP.

- The dependencies, which are the libraries, modules, packages, or other components that the application relies on to run. The dependencies can be installed or copied into the container file system, or they can be referenced from external sources, such as repositories or registries. They should also be compatible with the base image and the application, and should be kept up-to-date and secure. These dependencies can include:

  - system libraries, such as openssl

  - application-specific runtimes and libraries, such as Python

  - other tools or utilities that the application needs, such as git

- The configuration, which is the set of parameters, variables, or options that define the behavior and settings of the container and the application. The configuration can be specified in the container image, the container engine, the environment variables, or the command-line arguments. It can include information such as the port number, the log level, a database connection string, or an environment name. The configuration should make the container flexible and adaptable, so that the same container can run in different scenarios and environments, such as development, testing, or production.
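To illustrate the configuration item above, here is a minimal sketch of an application that reads its settings from environment variables and falls back to defaults. The variable names `APP_PORT`, `LOG_LEVEL`, and `APP_ENV`, the default values, and the `AppConfig` class are all assumptions made for this example, not a standard.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class AppConfig:
    port: int
    log_level: str
    environment: str


def load_config(env: Optional[Mapping[str, str]] = None) -> AppConfig:
    """Build the configuration from environment variables, with defaults."""
    # Use the real process environment unless a mapping is passed in (handy for tests).
    env = os.environ if env is None else env
    return AppConfig(
        port=int(env.get("APP_PORT", "8080")),         # hypothetical variable name
        log_level=env.get("LOG_LEVEL", "INFO"),        # hypothetical variable name
        environment=env.get("APP_ENV", "development"), # hypothetical variable name
    )


if __name__ == "__main__":
    print(load_config())
```

Because the settings live outside the code, the same container image could run in development, testing, or production simply by being started with different environment variables.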

History of isolation, lightweight virtualisation and business value

The history of containers in IT is a tapestry woven with innovation and evolution.

Innovation in isolation and lightweight virtualisation

The idea of containers dates back to the early 2000s, when technologies like FreeBSD Jails and Solaris Containers pioneered the concept of isolated environments for running applications. Linux-VServer marked a significant milestone in 2001, becoming the first project to implement container technology on Linux, enabling multiple virtual servers to operate on a single host with enhanced independence and security. However, Linux-VServer's reliance on a patched kernel posed challenges in maintenance and distribution.

In 2008, LXC (Linux Containers) emerged as a pivotal solution, combining two Linux kernel features to deliver lightweight virtualization: cgroups, which limit and allocate resources such as CPU and memory, and namespaces, which isolate resources so that a set of processes only sees its own view of the system. Despite its early adoption, LXC struggled with usability and standardization.
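Namespaces can be inspected directly on a Linux system, because the kernel exposes them under `/proc/<pid>/ns`. The sketch below lists the namespaces of the current process; the function name `current_namespaces` is made up for this illustration, and on systems without `/proc` (macOS or Windows, for example) it simply returns an empty result.

```python
import os


def current_namespaces(pid: str = "self") -> dict:
    """Map namespace names (e.g. 'pid', 'net', 'mnt') to their kernel identifiers."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}  # not Linux, or the process does not exist
    namespaces = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like 'net:[4026531992]'.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable with the current permissions
    return namespaces


if __name__ == "__main__":
    for name, ident in current_namespaces().items():
        print(f"{name:10} {ident}")
```

Two processes that show the same identifier for a namespace share that view of the system. A process inside a container would typically show different identifiers than a process running directly on the host.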

The landscape drastically shifted in 2013 with the advent of Docker, a game-changer that streamlined container development, deployment, and management across diverse platforms. Docker's introduction of container images revolutionized portability and reusability, galvanizing a vibrant community of developers and users.

Innovation leading to business value

One of the key business drivers behind the development of containers was the need for greater efficiency and agility in software development and deployment. Containers offered a lightweight alternative to traditional virtual machines (VMs), significantly reducing resource usage and startup times. This efficiency translated into cost savings for businesses, enabling them to allocate resources more effectively and scale their applications more efficiently.

Moreover, containers addressed the challenge of software inconsistency across different environments. By encapsulating applications and their dependencies into portable, self-contained units, containers ensured consistency from development to production, minimizing the risk of deployment errors and streamlining the release process.

The momentum continued in 2014 with the release of Kubernetes, an open-source juggernaut for automating containerized application orchestration. Born from Google's experience managing massive container workloads, Kubernetes offered a robust framework for scaling and managing containers across clusters, complete with essential features like service discovery and load balancing.

Moreover, the establishment of the Open Container Initiative (OCI) in 2015 marked a pivotal moment for standardization in the container ecosystem. The OCI's mission to develop open standards for container formats and runtimes fostered interoperability and innovation, ensuring consistency and compatibility across various platforms and tools. This standardization saved businesses further time and costs.

These advancements culminated in the major cloud providers offering managed container services such as Amazon Elastic Container Service (ECS), Google Kubernetes Engine (GKE), and Azure Kubernetes Service (AKS), which solidified the position of containers. Containers are now indispensable, and they often serve as the underlying infrastructure on which many platforms execute functions.
